For the past 30 or so years, if you wanted to proxy traffic to your web server, there were two, sometimes three, primary applications you reached for: Apache httpd, nginx, and HAProxy.

Apache httpd is one of the first web servers created, and is currently one of the most popular in use. While primarily used to serve web traffic, httpd can also be used to proxy traffic with the mod_proxy module. In addition to acting as a simple proxy, mod_proxy can also cache traffic, allowing for significant latency reduction for clients.

HAProxy is another option for proxying traffic. HAProxy tends to be deployed in load balancing scenarios and not single-server proxy situations. It is used by a number of high profile websites due to its speed and efficiency.

Finally, nginx is the third proxying solution. In addition to proxying, nginx can also serve many of the same roles as Apache httpd, including load balancing, caching, and traditional web serving. Like HAProxy, nginx is known for more efficient memory usage than Apache httpd.

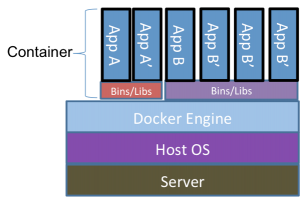

These solutions are battle hardened and have worked well for many years. But as technology changes, it often requires changes in the tools we use. With the advent of containerization, the tools we use should be re-examined and we should determine if we still have the best tools for the job.

Both nginx and HAProxy were the primary choices for proxying with containers. As the technology matured, however, new tools were created to take advantage of new features. One of those tools is Traefik.

Traefik is, according to their marketing, a “cloud native edge router.” If we tear apart the marketing speak, it’s basically a proxy built with containers in mind. It’s written in Go, and it has quite a few tricks up its sleeve.

You can use Traefik in the same general context as any of the other proxies, deploying it on a normal server and using a static configuration. Even deployed like this, the configuration is quite straightforward and very flexible.

But the real power of Traefik comes when it’s deployed in a container environment. Instead of using a static configuration, Traefik can listen to the Docker daemon for specific labels applied to containers. Those labels contain the Traefik configuration for the container they’re applied to. It works similarly to the static configuration, but it’s dynamic in nature, configuring Traefik as containers are started and stopped.
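
To make the label-driven model a little more concrete, here is a minimal Python sketch of the idea. It does not talk to Docker or Traefik at all; the `on_start` and `on_stop` functions and the in-memory `routes` table are hypothetical stand-ins for what Traefik does internally. The label names follow the Traefik 1.x Docker conventions (`traefik.enable`, `traefik.frontend.rule`, `traefik.port`), and the hostnames and ports are made up.

```python
# Illustrative only: simulates how container labels could drive a routing table.
# Nothing here calls Docker or Traefik; the function and variable names are hypothetical.

routes = {}  # container name -> (routing rule, backend port)


def on_start(name, labels):
    """Register a route when a container starts, if its labels ask for one."""
    # Assumption: containers are exposed unless they opt out with traefik.enable=false.
    if labels.get("traefik.enable", "true").lower() != "true":
        return
    rule = labels.get("traefik.frontend.rule")
    if rule is None:
        return  # no routing rule on this container, so nothing to expose
    port = int(labels.get("traefik.port", "80"))
    routes[name] = (rule, port)


def on_stop(name):
    """Drop the route when the container goes away."""
    routes.pop(name, None)


on_start("blog", {"traefik.frontend.rule": "Host:blog.example.com", "traefik.port": "2368"})
on_start("db", {"traefik.enable": "false"})  # opted out, never routed
print(routes)

on_stop("blog")
print(routes)
```

The point of the sketch is that the routing table is never edited by hand. It is derived entirely from container lifecycle events plus the labels each container carries.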

The community version of Traefik does this by directly mounting the Docker socket file, which is a security risk. If the Traefik container were compromised, an attacker could use the mounted socket to control other containers on the server. So this isn’t a secure way of deploying Traefik.

There are other ways to deploy, however, that make the system inherently more secure. Traefik has released an enterprise version, TraefikEE, that decouples the configuration management piece into its own container. This container also mounts the Docker socket file, but has no external ports open, which isolates it from outside traffic. The purpose of this new management container is to read changes in the labels and push the configuration to the primary Traefik container. It also scales, allowing additional Traefik nodes to be added, each of which receives its configuration from the management container.

Another way to deploy this securely is to use an external storage container for the Traefik configuration. Consul is a popular option for this. Consul, amongst other things, is a key-value store. The configuration for Traefik can be stored here and dynamically updated as needed. Traefik will automatically re-read the configuration and adjust accordingly.
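
As a rough illustration of what "storing the configuration in a key-value store" means, the Python sketch below flattens a nested configuration into slash-separated keys. The `flatten` helper is hypothetical, the example backend and frontend values are made up, and the key layout is only modeled on the Traefik 1.x KV conventions, so check the documentation for your version before relying on it. Nothing here connects to Consul.

```python
# Illustrative only: turns a nested config into flat KV pairs.
# The helper name, the sample values, and the exact key layout are assumptions.

def flatten(config, prefix="traefik"):
    """Turn a nested dict into flat 'a/b/c' -> value pairs for a KV store."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}/{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path))  # recurse into nested sections
        else:
            flat[path] = str(value)  # KV stores hold plain strings
    return flat


config = {
    "backends": {"blog": {"servers": {"server1": {"url": "http://10.0.0.5:2368"}}}},
    "frontends": {"blog": {"backend": "blog",
                           "routes": {"main": {"rule": "Host:blog.example.com"}}}},
}

for key, value in sorted(flatten(config).items()):
    print(key, "=", value)
```

Each printed line corresponds to one key that something would have to write into Consul, which is why a single logical change usually turns into several separate writes.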

There are caveats with this method, however. First, you’ll need a way to get the configuration for the containers into Consul. Fortunately, there’s an open source project called registrator that may already be able to do this.

The registrator project works similarly to the management container in TraefikEE, though in a more general way. It isn’t specifically for Traefik; it’s intended to register containers with Consul. It’s an isolated container that listens for labels and adds the data to Consul. There are no open ports, reducing the likelihood that this particular container can be compromised and used to attack the rest of the system.

I haven’t tested using registrator in this way, however. (It doesn’t work; see the UPDATE below.) I believe it would require modifying the registrator configuration to both register the containers and add arbitrary configuration information to Consul. This is something I’ll be investigating later as I learn more about how Consul works.

Another caveat to this approach is that there is no atomic update mechanism in Consul. What this means is that if Traefik reads the configuration while it’s being updated by something like registrator, it will end up using an incomplete configuration until the next time it reads the configuration. There is a workaround for this in the documentation, but it requires additional programming to put into place.
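
The general shape of such a workaround can be sketched in a few lines of Python. This is an in-memory simulation that uses a plain dict as a stand-in for the KV store, not Consul’s or Traefik’s actual API. The `traefik/alias` key and the versioned `traefik_configurations/<n>` prefixes are assumptions modeled on the common "write a complete copy, then flip a pointer" pattern, and `publish` and `read_config` are hypothetical helpers.

```python
# Illustrative only: a dict stands in for the KV store.
# Key names and helper functions are assumptions, not a real API.

def read_config(store):
    """Follow the alias pointer and return every key under the prefix it names."""
    prefix = store.get("traefik/alias")
    if prefix is None:
        return {}  # nothing has been published yet
    marker = prefix + "/"
    return {k[len(marker):]: v for k, v in store.items() if k.startswith(marker)}


def publish(store, version, config):
    """Write a full copy under a new prefix, then switch the alias in one write."""
    prefix = f"traefik_configurations/{version}"
    for key, value in config.items():
        store[f"{prefix}/{key}"] = value  # many writes, invisible to readers so far
    store["traefik/alias"] = prefix  # the single write that makes the version live


store = {}
publish(store, 1, {"frontends/blog/backend": "blog",
                   "backends/blog/servers/server1/url": "http://10.0.0.5:2368"})
print(read_config(store))
```

Because a reader only ever follows the alias, it sees either the old complete configuration or the new complete one, never a half-written mix. The cost is the extra tooling needed to write versioned copies and clean up old ones.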

Despite these few drawbacks, Traefik is an amazing tool for proxying in a container environment. The Containous team is also hard at work on version 2, which significantly cleans up and enhances the configuration options in Traefik. It also adds middleware functions that can dynamically alter traffic as it passes through Traefik. Definitely worth checking out when it’s released.

UPDATE: I finally had a chance to do some research on registering services with Consul and inserting data into the KV store. Unfortunately, registrator can’t do this job. It only creates tags on a Consul entry; it doesn’t write KV entries.

I have been unsuccessful, thus far, in finding a solution for this particular use case. However, I believe that the registrator source code can be updated to allow for this.