Welcome to the obligatory Docker 101 post. Before I dive into more technical posts on this subject, I thought it would be worth the time to explain what Docker is and what I find exciting about it. If you’re familiar with Docker already, there likely won’t be anything new here for you, but I welcome any feedback you have.

So, what is Docker? Docker is a containerization technology first released as open source in 2013. But what is containerization? Containerization, or operating-system-level virtualization, isolates a set of processes using kernel-level features so that those processes see only a localized view of the system. This differs from platform virtualization in that containerization does not present a set of virtual resources to the isolated processes; it presents real resources, limited only by the configuration of that particular container.

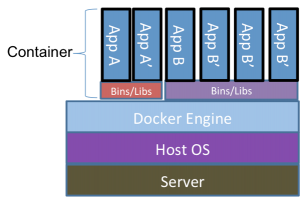

One of the more common explanations of this architecture is shown in the following image:

This image is a bit problematic in that it doesn’t truly represent what you actually see on a Docker host, but we’ll save that for a later blog post. For now, trust that the above is a very simplified view of the Docker world.
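To make the “localized view” idea a little more concrete, here is a minimal sketch, assuming a Linux host. On Linux, namespaces are one of the kernel features used for this kind of isolation, and each process exposes the namespaces it belongs to under `/proc/<pid>/ns`. The helper name `visible_namespaces` is my own, not part of Docker or any library.

```python
import os


def visible_namespaces(pid="self"):
    """Return {namespace type: kernel identifier} for a process.

    Reads the symlinks under /proc/<pid>/ns (Linux only). Returns an
    empty dict if that directory is missing or unreadable, for example
    on a non-Linux system.
    """
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        return {}
    for name in names:
        try:
            # Each entry is a symlink whose target names the namespace.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries we are not allowed to read
    return result


if __name__ == "__main__":
    for name, ident in visible_namespaces().items():
        print(f"{name:20} {ident}")
```

If you run this once on the host and once inside a container, you would typically expect the identifiers to differ for at least some namespace types. Two processes that report the same identifier for a type share that particular view of the system.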

So why containerization and why Docker in particular? There are a number of benefits that containerization technology provides. Among these are immutability, portability, and security. Let’s touch briefly on each of these.

Immutability refers to the concept of something being unchangeable. In the case of containerization, a container is considered to be immutable, but it’s important to understand what this means in practice. The container image itself is immutable; once a container is running, its contents can still be changed within the parameters of its execution. The immutable piece comes into play when you destroy a running container and recreate it from the container image. The recreated container will have exactly the same characteristics as the original, assuming the same configuration is used to start it. A notable exception is external volumes. Any volume external to the container is not guaranteed to be immutable, as it’s not part of the original container image.
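The image-versus-running-container distinction can be sketched with a toy model. This is only an analogy, not how Docker stores files: the image is a read-only mapping, and each container gets its own empty writable layer on top of it. The paths and the `create_container` helper are made up for illustration.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image contents (path -> file content), exposed read-only.
IMAGE = MappingProxyType({
    "/etc/app.conf": "debug=false",
    "/app/version": "1.0",
})


def create_container(image):
    """Return a container 'filesystem': an empty writable layer over the image.

    ChainMap sends every write to the first mapping (the writable layer)
    and falls through to the image for reads that the layer cannot answer.
    """
    return ChainMap({}, image)


# A running container can be changed...
first = create_container(IMAGE)
first["/etc/app.conf"] = "debug=true"
first["/tmp/cruft"] = "leftover state"

# ...but the image is untouched, so destroying the container and
# recreating it from the same image yields the original state again.
second = create_container(IMAGE)

print(first["/etc/app.conf"])
print(second["/etc/app.conf"])
print("/tmp/cruft" in second)
```

An external volume would correspond to a mapping shared between `first` and `second`, which is why its contents are not reset when the container is recreated.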

Portability refers to the ability to move containers between disparate systems and have them run exactly the same, assuming no external dependencies. There are limitations, such as requiring the same CPU architecture across the systems, but overall, a container can be moved from system to system and be expected to behave the same. In fact, this is part of the basis of orchestration and scalability of containers. In the event of a failure, or if additional instances of a container are necessary, they can be spun up on additional systems. Provided any external dependencies are available to all of the systems the container is spun up on, the containers will run and behave the same.
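As a small illustration of the CPU architecture caveat, here is a sketch of the kind of check a scheduler might make before placing a container on a host. The function names and the alias table are my own assumptions. The sketch deliberately ignores emulation and multi-architecture images, both of which can relax this rule in practice.

```python
import platform

# Common machine names (as reported by the OS) mapped to the
# architecture names typically seen in image metadata.
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def normalize_arch(machine):
    """Normalize an architecture name; unknown names pass through lowercased."""
    key = machine.lower()
    return _ARCH_ALIASES.get(key, key)


def can_run(image_arch, host_machine=None):
    """Return True if an image built for image_arch matches the host CPU.

    host_machine defaults to the machine this script is running on.
    """
    host = normalize_arch(host_machine or platform.machine())
    return normalize_arch(image_arch) == host


if __name__ == "__main__":
    print("host architecture:", normalize_arch(platform.machine()))
    print("amd64 image runs here:", can_run("amd64"))
```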

Containers provide an additional layer of security over traditional virtual or physical hosts. Because the processes are isolated within the container, an attacker is left with a very limited attack surface. In the event of a compromise, the attacker only gets a foothold on that instance of the container and is generally left with very little tooling inside the container with which to pivot to additional resources. If an attacker is able to make changes to the running container, the admin can simply destroy the container and spin up a new one, which will no longer contain the attacker’s changes. Obviously, the admin still needs to identify how the attacker got in and patch the image, but this ability to destroy and recreate a container is a powerful way to stop attackers from pivoting through your systems.

Finally, the internal networking of the Docker system allows containers to run with no externally accessible ports. So, for instance, if you’re running some sort of dynamic site that requires a proxy, application, and database, the system can be set up such that the proxy is the only externally accessible container. All communication between the proxy, application, and database can be performed over the internal Docker networking, which has no externally accessible endpoint.
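The proxy, application, and database layout described above can be sketched as a plain Python dict. The key names (`services`, `ports`, `networks`, `internal`) follow the Compose file format, but the service names, the `example/app` image, the port numbers, and both helper functions are assumptions made for illustration. Nothing here talks to Docker; it only models which services publish a port and which share a network.

```python
# Hypothetical three-tier stack. Only the proxy publishes a port.
stack = {
    "services": {
        "proxy": {
            "image": "nginx",
            "ports": ["443:443"],             # host port : container port
            "networks": ["edge", "backend"],
        },
        "app": {
            "image": "example/app",           # placeholder image name
            "networks": ["backend"],
        },
        "db": {
            "image": "postgres",
            "networks": ["backend"],
        },
    },
    "networks": {
        "edge": {},
        "backend": {"internal": True},        # no route to the outside
    },
}


def externally_reachable(config):
    """Return the names of services that publish at least one port."""
    return sorted(
        name for name, svc in config["services"].items() if svc.get("ports")
    )


def can_talk(config, first, second):
    """Return True if two services share at least one network."""
    def nets(name):
        return set(config["services"][name].get("networks", []))
    return bool(nets(first) & nets(second))


if __name__ == "__main__":
    print("published:", externally_reachable(stack))
    print("proxy <-> app:", can_talk(stack, "proxy", "app"))
    print("app <-> db:", can_talk(stack, "app", "db"))
```

The design choice worth noticing is that the application and database have no `ports` entry at all, so the only way in from outside is through the proxy.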

There’s a lot to be excited about here. Done correctly, the days of endlessly troubleshooting issues caused by server cruft are over. Deployment of resources becomes incredibly straightforward and rapid. Rollbacks become vastly simplified, as you can just spin up the old version of the container. Containers give developers a means to run their code locally, exactly as it will be run in production!

I’ve been working with containers for about 3 years now and the landscape just keeps expanding. There’s so much to learn and so many new tools to play with.

Finally, I’m going to leave you with a talk by Alice Goldfuss. Alice is an engineer who currently works for GitHub. She has a ton of container experience and a lot to say about it. Definitely worth a watch.