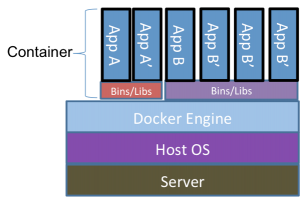

In a previous post I discussed Docker from a high level. In this post, we’ll take a closer look at how processes run in a container and how that differs from the common architectural view used to explain Docker. Remember this?

The problem with this image, however, is that while it helps conceptualize what we’re talking about, it doesn’t reflect reality. Based on it, you might expect a process listing on the host to show the Docker daemon plus one additional process for each container:

```
[root@dockerhost ~]# ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 Oct15 ?        00:02:40 /usr/lib/systemd/systemd --switched-root --system --deserialize 21
root         2     0  0 Oct15 ?        00:00:03 [kthreadd]
root         3     2  0 Oct15 ?        00:03:44 [ksoftirqd/0]
...
root      4000     1  0 Oct15 ?        00:03:44 dockerd
root      4353  4000  0 Oct15 ?        00:03:44 myawesomecontainer1
root      4354  4000  0 Oct15 ?        00:03:44 myawesomecontainer2
root      4355  4000  0 Oct15 ?        00:03:44 myawesomecontainer3
```

That is what the diagram suggests, but it’s not what you’ll actually find. Instead, you’ll see the Docker daemon, a number of helper daemons that handle tasks such as networking, and the processes running “inside” the containers, like this:

```
[root@dockerhost ~]# ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 Oct15 ?        00:02:40 /usr/lib/systemd/systemd --switched-root --system --deserialize 21
root         2     0  0 Oct15 ?        00:00:03 [kthreadd]
root         3     2  0 Oct15 ?        00:03:44 [ksoftirqd/0]
...
root      1514     1  0 Oct15 ?        04:28:40 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt nat
root      1673  1514  0 Oct15 ?        01:27:08 /usr/bin/docker-containerd-current -l unix:///var/run/docker/libcontainerd/docker-containerd.sock --metrics-interval=0 --start-timeout
root      4035  1673  0 Oct31 ?        00:00:07 /usr/bin/docker-containerd-shim-current d548c5b83fa61d8e3bd86ad42a7ffea9b7c86e3f9d8095c1577d3e1270bb9420 /var/run/docker/libcontainerd/
root      4054  4035  0 Oct31 ?        00:01:24 apache2 -DFOREGROUND
33        6281  4054  0 Nov13 ?        00:00:07 apache2 -DFOREGROUND
33        8526  4054  0 Nov16 ?        00:00:03 apache2 -DFOREGROUND
33       24333  4054  0 04:13 ?        00:00:00 apache2 -DFOREGROUND
root     28489  1514  0 Oct31 ?        00:00:01 /usr/libexec/docker/docker-proxy-current -proto tcp -host-ip 0.0.0.0 -host-port 443 -container-ip 172.22.0.3 -container-port 443
root     28502  1514  0 Oct31 ?        00:00:01 /usr/libexec/docker/docker-proxy-current -proto tcp -host-ip 0.0.0.0 -host-port 80 -container-ip 172.22.0.3 -container-port 80
33       19216  4054  0 Nov13 ?        00:00:08 apache2 -DFOREGROUND
```

Without diving too deep into this, the Docker processes you see above serve a few purposes. The main dockerd process is responsible for managing the Docker containers on this host. The containerd processes (docker-containerd plus one docker-containerd-shim per container) handle the lower-level management tasks for the containers themselves. And finally, the docker-proxy processes handle networking between the host and the container: each one forwards traffic arriving on a host port (443 and 80 here) to the same port on the container’s IP address, 172.22.0.3.
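Because the parent/child relationships are what matter here, a short Python sketch can make them explicit by following the PPID column. `SAMPLE` is an abbreviated copy of the host listing above with the long command lines trimmed, and `parse_ps` and `ancestry` are hypothetical helper names, not part of any Docker tooling.

```python
# Minimal sketch: rebuild the parent/child chain from `ps -ef` output.
# SAMPLE is an abbreviated copy of the host listing above (long command
# lines trimmed); in practice you would feed in real `ps -ef` output.

SAMPLE = """\
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 Oct15 ?        00:02:40 /usr/lib/systemd/systemd
root      1514     1  0 Oct15 ?        04:28:40 /usr/bin/dockerd-current
root      1673  1514  0 Oct15 ?        01:27:08 /usr/bin/docker-containerd-current
root      4035  1673  0 Oct31 ?        00:00:07 /usr/bin/docker-containerd-shim-current
root      4054  4035  0 Oct31 ?        00:01:24 apache2 -DFOREGROUND
33        6281  4054  0 Nov13 ?        00:00:07 apache2 -DFOREGROUND
root     28489  1514  0 Oct31 ?        00:00:01 /usr/libexec/docker/docker-proxy-current
"""


def parse_ps(text: str) -> dict[int, tuple[int, str]]:
    """Map each PID to (PPID, CMD) from `ps -ef` output."""
    procs = {}
    for line in text.splitlines()[1:]:  # skip the header row
        fields = line.split(None, 7)  # CMD is the 8th field and may contain spaces
        if len(fields) < 8:
            continue  # e.g. a "..." placeholder line
        pid, ppid, cmd = int(fields[1]), int(fields[2]), fields[7]
        procs[pid] = (ppid, cmd)
    return procs


def ancestry(procs: dict[int, tuple[int, str]], pid: int) -> list[int]:
    """Follow PPID links from `pid` up to the top of the listing."""
    chain = []
    while pid in procs:
        chain.append(pid)
        pid = procs[pid][0]
    return chain


if __name__ == "__main__":
    procs = parse_ps(SAMPLE)
    for pid in ancestry(procs, 6281):
        print(pid, procs[pid][1])
```

Starting from one of the apache2 workers (PID 6281), the chain runs 6281 → 4054 → 4035 → 1673 → 1514 → 1: the worker’s parent is the container’s main apache2 process, whose parent is the containerd shim, followed by containerd, dockerd, and finally systemd. No process named after the container appears anywhere in that chain.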

You’ll also see a number of apache2 processes mixed in here. Those are the processes running within the container, and they look just like regular processes running on a Linux system. The key difference is that a number of kernel features are being used to isolate them from the rest of the system. From the Docker host you can see them, but from inside the container you cannot see the host’s processes.

What is this black magic, you ask? Well, it’s primarily two kernel features called Namespaces and cgroups. Let’s take a look at how these work.

Namespaces are essentially mapping mechanisms in the kernel that give a process its own partitioned view of a set of resources. For instance, a process can be started in its own PID namespace; that process and any processes it starts can see each other, but nothing outside of that namespace. Let’s go back to our earlier process list. Inside a given container you may see this:

```
[root@dockercontainer ~]# ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 Nov27 ?        00:00:12 apache2 -DFOREGROUND
www-data    18     1  0 Nov27 ?        00:00:56 apache2 -DFOREGROUND
www-data    20     1  0 Nov27 ?        00:00:24 apache2 -DFOREGROUND
www-data    21     1  0 Nov27 ?        00:00:22 apache2 -DFOREGROUND
root       559     0  0 14:30 ?        00:00:00 ps -ef
```

While outside of the container, you’ll see this:

```
[root@dockerhost ~]# ps -ef
UID        PID  PPID  C STIME TTY          TIME CMD
root         1     0  0 Oct15 ?        00:02:40 /usr/lib/systemd/systemd --switched-root --system --deserialize 21
root         2     0  0 Oct15 ?        00:00:03 [kthreadd]
root         3     2  0 Oct15 ?        00:03:44 [ksoftirqd/0]
...
root      1514     1  0 Oct15 ?        04:28:40 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt nat
root      1673  1514  0 Oct15 ?        01:27:08 /usr/bin/docker-containerd-current -l unix:///var/run/docker/libcontainerd/docker-containerd.sock --metrics-interval=0 --start-timeout
root      4035  1673  0 Oct31 ?        00:00:07 /usr/bin/docker-containerd-shim-current d548c5b83fa61d8e3bd86ad42a7ffea9b7c86e3f9d8095c1577d3e1270bb9420 /var/run/docker/libcontainerd/
root      4054  4035  0 Oct31 ?        00:01:24 apache2 -DFOREGROUND
33        6281  4054  0 Nov13 ?        00:00:07 apache2 -DFOREGROUND
33        8526  4054  0 Nov16 ?        00:00:03 apache2 -DFOREGROUND
33       24333  4054  0 04:13 ?        00:00:00 apache2 -DFOREGROUND
root     28489  1514  0 Oct31 ?        00:00:01 /usr/libexec/docker/docker-proxy-current -proto tcp -host-ip 0.0.0.0 -host-port 443 -container-ip 172.22.0.3 -container-port 443
root     28502  1514  0 Oct31 ?        00:00:01 /usr/libexec/docker/docker-proxy-current -proto tcp -host-ip 0.0.0.0 -host-port 80 -container-ip 172.22.0.3 -container-port 80
33       19216  4054  0 Nov13 ?        00:00:08 apache2 -DFOREGROUND
```

There are two things to note here. First, within the container you only see the processes that the container runs: no systemd, no Docker daemons, only the apache2 and ps processes. From outside of the container, however, you see all of the processes running on the system, including those within the container. Second, the PIDs listed inside the container are different from those outside of it. In this example, PID 4054 outside of the container would appear to map to PID 1 inside of the container. This provides a layer of security: a process inside a container can only see and signal other processes running in the same container. And if you kill PID 1 inside a container, the entire container comes to a screeching halt, because the kernel terminates every other process in a PID namespace once that namespace’s PID 1 exits, much as a Linux host goes down if its process 1 dies.
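On Linux 4.1 and later you can inspect this PID mapping directly from the host: the `NSpid` line in `/proc/<pid>/status` lists a process’s PID in each PID namespace it belongs to, outermost first. Here is a minimal Python sketch that parses that line. The sample status text is illustrative, written to match the example above rather than captured from the author’s host, and `pid_inside_container` is a hypothetical helper name.

```python
# Minimal sketch (Linux 4.1+): read a process's PID in each PID namespace
# it belongs to from the NSpid line of /proc/<pid>/status.


def nspid_chain(status_text: str) -> list[int]:
    """Return the PIDs on the NSpid line, outermost namespace first."""
    for line in status_text.splitlines():
        if line.startswith("NSpid:"):
            return [int(field) for field in line.split()[1:]]
    raise ValueError("no NSpid line found (kernel older than 4.1?)")


def pid_inside_container(host_pid: int) -> int:
    """Hypothetical helper: the PID a process has in its innermost namespace."""
    with open(f"/proc/{host_pid}/status") as status:
        return nspid_chain(status.read())[-1]


# Illustrative status excerpt for the container's main apache2 process,
# written to match the example above rather than captured from a real host.
SAMPLE_STATUS = "Name:\tapache2\nPid:\t4054\nPPid:\t4035\nNSpid:\t4054\t1\n"

if __name__ == "__main__":
    print(nspid_chain(SAMPLE_STATUS))  # prints [4054, 1]
```

Run as root on the Docker host, `pid_inside_container(4054)` would be expected to return 1 for the container above, confirming the mapping that the two `ps` listings only suggest.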

PID namespaces are only one of the namespaces that Docker makes use of. There are also NET, IPC, MNT, UTS, and User namespaces, though User namespaces are disabled by default. Briefly, these namespaces provide the following:

- NET: Provides an isolated network stack for use within the container. Network namespaces can also be shared between containers when they are configured to do so.

- IPC: Provides isolated inter-process communication within a container, allowing processes in the container to use features such as shared memory while keeping that communication inside the container.

- MNT: Gives the container its own set of mount points, so that mounts created inside the container are not added to the host system.

- UTS: Allows each container to be presented with its own hostname and domain name.

- User: Maps users and groups inside a container to different users and groups on the host system, so that the root user within a container does not have to run as root (UID 0) outside of the container.
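To tie the list above to the kernel interfaces behind it, here is a minimal Python sketch. For each namespace it records the entry under `/proc/<pid>/ns/` and the `clone(2)`/`unshare(2)` flag value from `<linux/sched.h>`. It then checks whether two processes share a namespace (following the rule in the `namespaces(7)` man page: same device ID and inode number) and translates a UID through a user namespace’s `uid_map`. The dictionary and function names are my own, and the `0 100000 65536` mapping in the usage note is hypothetical.

```python
# Minimal sketch (Linux): kernel-side view of the namespaces listed above.
import os
from typing import Optional

# Docker's name -> (/proc/<pid>/ns/ entry, clone(2)/unshare(2) flag value)
NAMESPACES = {
    "PID": ("pid", 0x20000000),    # CLONE_NEWPID
    "NET": ("net", 0x40000000),    # CLONE_NEWNET
    "IPC": ("ipc", 0x08000000),    # CLONE_NEWIPC
    "MNT": ("mnt", 0x00020000),    # CLONE_NEWNS (the mount namespace flag)
    "UTS": ("uts", 0x04000000),    # CLONE_NEWUTS
    "User": ("user", 0x10000000),  # CLONE_NEWUSER
}


def clone_flags(names: list[str]) -> int:
    """OR together the flags needed to create the given namespaces."""
    flags = 0
    for name in names:
        flags |= NAMESPACES[name][1]
    return flags


def same_namespace(pid_a: int, pid_b: int, name: str) -> bool:
    """True if both processes are in the same namespace of this type.

    Per namespaces(7), two processes share a namespace when their
    /proc/<pid>/ns/<entry> links have the same device ID and inode number.
    Inspecting other users' processes typically requires root.
    """
    entry = NAMESPACES[name][0]
    a = os.stat(f"/proc/{pid_a}/ns/{entry}")  # os.stat follows the link
    b = os.stat(f"/proc/{pid_b}/ns/{entry}")
    return (a.st_dev, a.st_ino) == (b.st_dev, b.st_ino)


def host_uid(container_uid: int, uid_map: str) -> Optional[int]:
    """Translate a UID through the lines of a /proc/<pid>/uid_map file.

    Each line is '<first UID inside> <first UID outside> <count>'.
    Returns None when the UID is not mapped.
    """
    for line in uid_map.splitlines():
        if not line.strip():
            continue  # ignore blank lines
        inside, outside, count = (int(field) for field in line.split())
        if inside <= container_uid < inside + count:
            return outside + (container_uid - inside)
    return None
```

With User left out, as in the default configuration described above, `clone_flags(["PID", "NET", "IPC", "MNT", "UTS"])` gives `0x6C020000`. Under the hypothetical mapping `0 100000 65536`, `host_uid(0, ...)` returns 100000, which is exactly the point of the User namespace: root in the container is an unprivileged UID on the host. On the earlier host, `same_namespace(1, 4054, "PID")` would be expected to return `False`, since systemd and the container’s apache2 live in different PID namespaces.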

The second piece of black magic is Control Groups, or cgroups. Cgroups limit the resources a process can use. Where namespaces create a localized view of resources for a process, cgroups create a limited pool of resources for it. For instance, you can assign specific CPU, memory, and disk I/O limits to a container. Once a cgroup is assigned, the process cannot exceed the limits placed on it, which prevents processes from “running away” and exhausting system resources. Instead, the process has to live within the lower limits: a CPU-limited process is throttled, and a process that exceeds its memory limit can be killed by the kernel’s out-of-memory (OOM) killer.
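To make the limits a little more concrete, here is a minimal Python sketch that interprets two cgroup v2 interface files, `cpu.max` and `memory.max`, which live in a container’s cgroup directory under `/sys/fs/cgroup/` (the exact path depends on the cgroup driver). This assumes a cgroup v2 host; cgroup v1 hosts expose `cpu.cfs_quota_us`, `cpu.cfs_period_us`, and `memory.limit_in_bytes` instead. The function names are hypothetical. Docker documents `--cpus=1.5` as equivalent to a 150000 µs quota per 100000 µs period, so a container started that way should read back as 1.5 CPUs here.

```python
# Minimal sketch: interpret cgroup v2 limit files like those Docker writes
# for its --cpus and --memory options. Assumes a cgroup v2 host.
from typing import Optional


def cpu_limit(cpu_max: str) -> Optional[float]:
    """Parse cpu.max ('<quota> <period>' in microseconds, quota may be 'max').

    Returns the number of CPUs the cgroup may use, or None if unlimited.
    """
    quota, period = cpu_max.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def memory_limit(memory_max: str) -> Optional[int]:
    """Parse memory.max (a byte count, or 'max' for unlimited)."""
    value = memory_max.strip()
    return None if value == "max" else int(value)
```

For example, a container started with `--memory=512m` would be expected to show `536870912` in `memory.max`, which `memory_limit` returns as an integer byte count.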

By themselves, these features can be a bit daunting to set up for each process or group of processes. Docker conveniently packages this up, making deployment as simple as a docker run command. Combined with the packaging of a Docker container (which I’ll cover in a future post), Docker becomes a great way to deploy software in a reproducible, secure manner.